Section 4.2 Network Architecture

As you study this section, answer the following questions:

- How can a virtual router be used to increase network security?

- What is the difference between virtual desktop infrastructure (VDI) and containerization?

- How can virtualization save money?

- What are some benefits of cloud computing?

- What are four types of cloud services deployment?

- What are the benefits and risks of serverless computing?

- How does software-defined networking segmentation create a more secure environment?

- What trends are driving the need for Zero Trust architecture?

The key terms for this section include:

| Term | Definition |

|---|---|

| Virtualization | A technology that creates a software-based, virtual representation of a system component. The virtual component can be an application, network interface card (NIC), server, storage, etc. |

| Virtual network | A computer network consisting of virtual and physical devices. |

| Cloud computing | A combination of software, data access, computation, and storage services provided to clients through the internet. |

| Serverless computing | A method of cloud computing execution where the backend infrastructure is provided to developers on an as-needed basis. The cloud service providers manage the servers so the developers can focus on creating and deploying the code they need. |

| Software-defined networking (SDN) | A network architecture design that separates the control plane from the data plane through a virtual control plane that makes all traffic management decisions. |

| Zero Trust architecture | A network architecture design based on the principle of least privilege and continuous access verification. |

This section helps you prepare for the following certification exam objectives:

| Exam | Objective |

|---|---|

| CompTIA CySA+ CS0-003 | 1.4 Compare and contrast threat-intelligence and threat-hunting concepts |

| TestOut CyberDefense Pro | 1.1 Monitor networks; 3.1 Implement security controls to mitigate risk |

4.2.1 Virtualization Management Overview

Virtualization Management Overview 00:00-00:22 Let's talk a bit about virtualization.

Organizations generally use virtual devices to save money and to simplify management tasks.

For example, when a company uses fewer hardware devices, they save money on hardware costs.

Let's take a closer look at how virtualization is used.

Virtualization 00:22-01:26 Hardware virtualization lets you run multiple computers, known as virtual machines or VMs, on a single physical device called a host.

When you do this, each independent VM runs its own operating system and applications, and thinks it has its own hardware resources. However, each VM is sharing the hardware resources with the host.

A common implementation of this type of virtualization is used with servers, letting a company consolidate many servers onto one physical host.

This means that instead of having many underutilized servers, as in a traditional networking model, with virtualization you can fully utilize the CPU, RAM, disks, and other network subsystems on the host on which the VMs are running.

Another example of this is to virtualize desktops. This is often used by individuals that need to run several machines on their own computer for testing or development purposes.

Some of the advantages of virtualization are that it can increase IT agility, allow for greater flexibility, deliver increased performance, and let you scale up quickly while enjoying significant cost savings.

Virtual Desktop Infrastructure 01:26-02:35 Another type of virtualization is known as Virtual Desktop Infrastructure, or VDI.

Similar to what we've just discussed, VDI is used to virtualize desktop computers on a remote host.

However, with VDI, the VMs are created with the intent of being the end-user's main computer, or a temporary computer, which can be accessed from any location or computer, such as a tablet or thin client.

Since these VMs are running remotely, all the processing is done on the host computer.

These VM instances are accessed using a connection broker. This broker is typically a software-based gateway that acts like a traffic cop to control session access between the end users and the VDI VMs.

Since these VDI VMs run on a remote host, you can not only access them remotely, but they are also easier to back up, support, and troubleshoot.

It also means that since these VMs can be accessed from an inexpensive thin client, there's no need to buy expensive desktop computers for each employee.

Security is another major benefit of using VDI. Since all the data resides on the remote host, it can be better protected, even if the user's device is compromised or stolen.

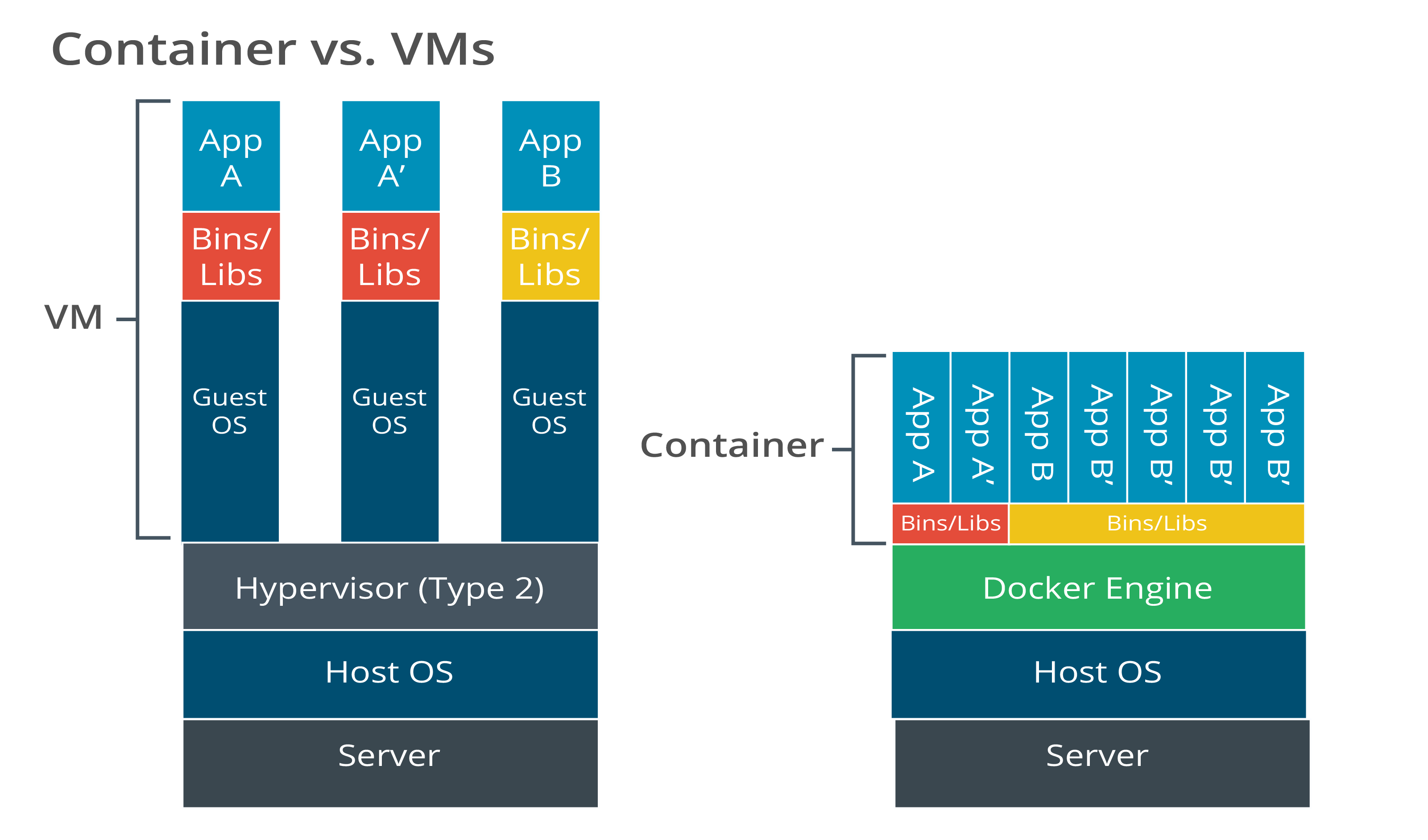

Containerization 02:35-03:48 Another type of virtualization is known as Containerization.

Containerization is the process of virtualizing an application (or system) and its dependencies within a computer's own operating system instead of virtualizing a whole machine. In essence, the application and its dependencies are run from a container.

Each of these containers includes and isolates all the runtime components needed to run the application. This includes such things as the binaries and configuration files. This means you can run multiple containers, without depending on (or conflicting with) other applications using the same operating system.

For example, using this type of virtualization, you could create containers to run two different versions of the same application at the same time, since each acts independently of the other.

Containers are also portable, meaning once created, you can copy or move the container to another device.

Although several types of container virtualization have been around for many years, the approach has gained prominence with open-source Docker.

Other vendors also provide these types of containers, such as CoreOS's Rocket and Canonical's LXD containerization engine for Ubuntu.

Microsoft has also entered this field with products such as FSLogix and Drawbridge.

Virtualization Security 03:48-04:37 As you've seen, virtual machines and containers use their host for processing, so it's important that the physical hardware be well secured. If an attacker were to obtain access to a piece of hardware that houses numerous VMs or containers, they could potentially control or adversely affect all of them.

It's also important that you develop procedures for creating and managing your virtual systems.

Security procedures for virtual systems should be like those used for physical systems. In fact, it could be argued that security for VMs is even more important because VMs are created for easy replication so one bad configuration could be replicated across your network.

Virtual machines and virtual networks should be documented and should be included in the same network maps as the physical hardware. Internet-facing and internal services should not be deployed on the same physical device.

Virtualization Devices 04:37-06:37 Virtualization isn't limited to just computers and applications. Other devices, such as switches, and routers can also be virtualized.

A virtual switch, or vSwitch, performs the same function as a physical switch, which is to facilitate communication between computers. In this case, the computers are VMs.

The ability to create and manage vSwitches is often integrated with virtual machine software. Sometimes, they're even part of a server's firmware.

The nice thing about a vSwitch is that it's often much easier to implement and manage than a traditional switch. In addition, a vSwitch can ensure the integrity of virtual hosts, thereby creating a more secure network.

For example, a vSwitch could make sure that a VM meets certain security criteria before it can communicate on the virtual network. If it fails the security check, its communications will be blocked.
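The security check described here can be sketched in a few lines of Python. This is a toy model, not how any particular vSwitch product works: the `VirtualMachine` and `VSwitch` classes, and the criteria used (patched and antivirus running), are assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class VirtualMachine:
    name: str
    patched: bool
    antivirus_running: bool


class VSwitch:
    """Toy vSwitch that forwards traffic only for VMs that pass a posture check."""

    def __init__(self):
        self.admitted = set()

    def admit(self, vm: VirtualMachine) -> bool:
        # Hypothetical security criteria: fully patched and antivirus running.
        if vm.patched and vm.antivirus_running:
            self.admitted.add(vm.name)
            return True
        self.admitted.discard(vm.name)
        return False

    def forward(self, src: str, dst: str, payload: str) -> str:
        # Block the traffic unless both endpoints passed the check.
        if src in self.admitted and dst in self.admitted:
            return f"{src} -> {dst}: {payload}"
        return "blocked"


switch = VSwitch()
switch.admit(VirtualMachine("web01", patched=True, antivirus_running=True))
switch.admit(VirtualMachine("db01", patched=True, antivirus_running=True))
switch.admit(VirtualMachine("test01", patched=False, antivirus_running=True))

print(switch.forward("web01", "db01", "query"))   # web01 -> db01: query
print(switch.forward("test01", "db01", "query"))  # blocked
```

The unpatched VM never enters the admitted set, so anything it sends is dropped before it reaches another VM.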

A virtual router, or vRouter, is a software function that replicates a physical router. The nice thing about a virtual router is that it frees the IP routing function from specific hardware. This means you can move routing functions around a network freely, creating a more dynamic network environment.

When working with vSwitches and vRouters, make sure to test the capabilities of these virtual devices since they may perform differently than your physical networking devices.

Keep in mind that while virtual networking devices can be very flexible and offer a lot of benefits, they still aren't a full replacement for physical networking devices.

Because these components rely on software applications, they are susceptible to things like virtual machine escape.

This is where an attacker uses code that allows a program to break out of the virtual machine on which it's running and interact with the host operating system.

As such, if your entire network is virtualized, there's no physical boundary between certain systems. With physically segmented systems, it is impossible for an attacker to leap between segments without physical access. With virtual segmentation, an attacker only needs to know of an exploit that allows the leap.

Summary 06:37-07:04 That's it for this lesson.

In this lesson we discussed what virtualization is, and how it can be used to help save time and money.

We also introduced you to Virtual Desktop Infrastructure, or VDI, and containerization.

Next, we covered a few important considerations regarding securing virtual networks.

And we ended this lesson discussing vSwitches and vRouters, two types of virtual devices.

4.2.2 Virtualization Management Facts

This lesson covers the following topics:

- Virtualization

- Virtual network

- Virtual security

- Virtual devices

- Application virtualization and containers

Virtualization

Virtualization is a technology that creates a software-based representation of a certain component. The virtual component can be an application, network interface card (NIC), server, or something else. A virtual network is a computer network consisting of virtual and physical devices.

Virtual devices save organizations money. Virtual machines (VMs) help organizations centralize workloads and manage assets without purchasing costly equipment. By using less physical storage space, a company can have at least twice as many devices in a network because they only pay for a small space in a data center.

Hypervisor

The components within a virtualized server appear the same as they would in a physical server. The fundamental difference of hardware components in a virtual server is that they are presented to the operating system by way of a hypervisor. The hypervisor abstracts the details of the physical server upon which the virtual machine runs. By using a hypervisor, a virtual machine has the potential to run, essentially unmodified, on any other physical server on which the hypervisor is installed. The hypervisor presents the same hardware components to the virtual machine by translating the differences between the physical hardware and the hypervisor-defined components the virtual machine expects. Doing this not only allows one physical server to run multiple virtual machines simultaneously, but also allows for virtual machines to move from one physical system to another—an essential capability for supporting high-availability and business continuity requirements. Furthermore, due to the fact that the hypervisor-defined hardware components are managed via software, components can be changed without the need for in-person maintenance. In fact, when using enterprise-grade hypervisors, components can be changed (such as adding memory and processors) without experiencing any maintenance downtime and/or through fully automated and demand-driven processes.

Hypervisors fall into two major categories: Type I and Type II.

| Type | Description |

|---|---|

| Type I | Type I hypervisors are used in enterprise settings and are purpose-built, lean, and efficient. The console of a server running a Type I hypervisor shows a screen with a message directing the observer to use special-purpose, remote management tools to interact with it. These management tools provide a graphical user interface containing details of all of the different servers running the hypervisor (and configured to operate in logical groups) as well as the means to update or change the configuration of the hypervisor and the VMs running on it. |

| Type II | These hypervisors are added to a full-featured operating system. Hypervisor capability can be added to many modern operating systems either as an integrated feature or as a third-party software add-on. In this way, a Type II hypervisor is simply an additional service provided by the operating system and co-exists with any other services provided by it. In practical terms, Type II hypervisors are useful for building labs for testing and software analysis, although they can be used to run virtualized servers that support an organization in the same way as a Type I hypervisor can. |

Virtual Network

Virtual network software makes it easy to deploy and manage network services and network resources. Important facts about virtual networks include the following:

- VMs can support an unlimited number of virtual networks.

- You can connect an unlimited number of VMs to a virtual network.

- A virtual network (including all of its IP addresses, routes, network appliances, etc.) appears as if it is running directly on the physical network.

- The servers connected to a virtual network operate as if they are running directly on the physical network.

- Multiple virtual networks can be associated with a single physical network adapter.

- The physical networking devices are responsible for forwarding packets.

- The physical devices are partitioned into one or more virtual devices, depending on network necessity and the device capability.

- When setting up a new virtual device, you define how much physical device capability each partition will have. This means that one physical server could act as two or three VMs that work separately from one another and have their own specifications.
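The partitioning idea in the last two points can be sketched as follows. This is a simplified model that assumes resources are reserved one-for-one (real hypervisors often allow overcommitment); the `PhysicalHost` class and the capacity figures are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalHost:
    cpu_cores: int
    ram_gb: int
    # Maps VM name -> (cpu_cores, ram_gb) reserved for that VM.
    vms: dict = field(default_factory=dict)

    def free(self):
        """Return the (cpu_cores, ram_gb) not yet assigned to any VM."""
        used_cpu = sum(cpu for cpu, _ in self.vms.values())
        used_ram = sum(ram for _, ram in self.vms.values())
        return self.cpu_cores - used_cpu, self.ram_gb - used_ram

    def create_vm(self, name, cpu_cores, ram_gb):
        """Carve out a partition of the host, or fail if capacity is exhausted."""
        free_cpu, free_ram = self.free()
        if cpu_cores > free_cpu or ram_gb > free_ram:
            raise ValueError(f"not enough capacity left for {name}")
        self.vms[name] = (cpu_cores, ram_gb)


host = PhysicalHost(cpu_cores=16, ram_gb=64)
host.create_vm("file-server", cpu_cores=4, ram_gb=16)
host.create_vm("dhcp-server", cpu_cores=2, ram_gb=8)
print(host.free())  # (10, 40)
```

Each VM gets its own specification, and the host refuses a request that would exceed what the physical hardware can supply.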

Managing the virtual parts of network infrastructure is like managing the physical parts of a network infrastructure. The following table describes two methods you can use for virtualization, virtual desktop infrastructure and containerization.

| Method | Description |

|---|---|

| Virtual desktop infrastructure (VDI) | When a VDI boots, it starts a minimal operating system for a remote user to access. |

| Containerization | Containerization is a hypervisor substitute. |

Virtual Security

Because administration takes place at both the VM and hypervisor levels, there are additional security responsibilities to consider.

| Responsibility | Description |

|---|---|

| Secure the host platform | A VM uses its physical host for processing. |

| Establish secure procedures | Develop procedures for creating and managing VMs. |

| Secure virtual networks | Best security practices for virtual networks include: |

Virtual Devices

The following table describes virtual networking devices you can use to create a more secure network design.

| Device | Description |

|---|---|

| vSwitch | A vSwitch is software that facilitates the communication between virtual machines by checking data packets before moving them to a destination. A vSwitch may be software installed on the virtual machine or it may be part of the server firmware. |

| vRouter | A vRouter is software that performs the tasks of a physical router. Because virtual routing frees the IP routing function from specific hardware, you can move routing functions around a network. |

| Virtual firewall appliance (VFA) | A VFA is software that functions as a network firewall device that provides packet filtering and monitoring. The VFA can run as a traditional software firewall on a virtual machine. |

Application Virtualization and Containers

With application virtualization, rather than run the whole desktop environment, the client accesses an application hosted on a server and essentially streams the application from the server to the client. The end result is that the application simply appears to run locally just like any other application. Popular vendor products include Citrix XenApp (formerly MetaFrame/Presentation Server), Microsoft App-V, and VMware ThinApp. These solution types are often used with HTML5 remote desktop apps (referred to as "clientless") because users can access them through ordinary web browser software.

Understand the following important facts about application cell/container virtualization:

- Application cell/container virtualization dispenses with the idea of a hypervisor and instead enforces resource separation at the operating system level.

- The OS defines isolated cells for each user instance to run in.

- Each cell or container is allocated CPU and memory resources, but the processes all run through the native OS kernel.

- These containers may run slightly different OS distributions but cannot run guest OSs of different types (you could not run Windows or Ubuntu in a Red Hat Linux container, for instance).

- The containers might alternatively run separate application processes, in which case the variables and libraries required by the application process are added to the container.

One of the best-known container virtualization products is Docker ( docker.com ). Containerization underpins many cloud services. In particular, it supports microservices and serverless architecture. Containerization is also being widely used to implement corporate workspaces on mobile devices.

Description

The chart on the left shows 3 horizontal blocks stacked one above the other. From bottom to top, they are labeled Server, Host OS, and Type 2 Hypervisor. Three spaced-out vertical bars are stacked on top of the Type 2 Hypervisor block and represent the VM. Each stack consists of three sections. From bottom to top, the first section of each stack is labeled Guest OS and the second section is labeled Bins/Libs. The top sections of the three stacks are labeled App A, App A prime, and App B, respectively. The chart on the right shows three horizontal blocks stacked one above the other. From bottom to top, they are labeled Server, Host OS, and Docker Engine. There are two rows of blocks stacked above the Docker Engine that represent the Container. The first row above the Docker Engine is divided into two horizontal blocks each labeled Bins/Libs. The next row consists of App A and App A prime bars over the Bins/Libs section on the left and App B and 4 App B prime bars over the Bins/Libs section on the right.

4.2.3 Cloud Computing

Cloud Computing 00:00-00:27 More and more networks are shifting to cloud-based services to handle their needs. Chances are you've heard about the cloud. It's used in almost every conversation about networking or the internet. But what exactly is the cloud? Well, it's a lot of different things, and a lot of them have been around for a while.

In this lesson, I'm going to cover what the cloud is, cloud service models, and cloud deployment models as well.

Cloud Basics 00:27-03:04 In a traditional network, you would have your switches, routers, servers, and workstations all connected to each other and to the internet. Each of your workstations would have all the necessary applications and hardware components installed on them, letting them carry out their daily tasks. And each server would have a single OS installed and would provide a specific function—think file servers or DHCP servers.

But the cloud changes how a traditional network model works. For example, let's say these workstations need to perform a lot of calculations. In a traditional networking model, each of these workstations would need the necessary software and hardware to carry out these tasks. That can get expensive. What the cloud does is outsource these tasks to other machines, which are either located on the same network or provided by a third party via the internet.

As you can see, the cloud provides the computing resources and delivers them through the network or over the internet. You can perform calculations, store data, or network resources together.

The cloud name itself comes from the cloud-shaped symbol typically used to signify the internet or a complex network system in diagrams.

For something to be considered a true cloud service, it needs to have five characteristics, which have been determined by the National Institute of Standards and Technology, or NIST. First, it needs to be an on-demand self-service, meaning users must be able to dynamically obtain resources. Second, it needs to be provided over a network, typically the internet, and be available on multiple platforms. In other words, it needs to be available on mobile phones, tablets, laptops, and workstations. This is called broad network access.

Third, it needs to have resource pooling. This means multiple users can use the same resources. These resources can be physical or virtual, but they need to be able to scale dynamically according to demand.

For example, if a server suddenly gets a thousand connections, it needs the ability to provision additional computing power or offload clients to different VMs or servers.

Fourth, the service needs to have rapid elasticity. This means the services can seamlessly provision resources and scale resource usage back depending on demand. And last of all, the fifth characteristic is what's known as a measured service. This means that resources can be controlled and optimized automatically. This also means that the service provider can collect information about the service.

Cloud service providers offer different service models depending on a network's needs. I'll go into some of these cloud service models in depth.

Cloud Service Models 03:04-03:32 But before we dive into the different cloud services, I want to point out that the name used to reference almost all cloud services begins with the name of the service itself and ends with the phrase as a service. And when we reference them, the name is typically abbreviated. For our examples, let's use Desktop as a Service and Banking as a Service. Desktop as a Service would be DaaS, while Banking as a Service would be BaaS. This is a quick way to identify a cloud service.
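The naming pattern can be expressed as a small helper. This is only a sketch of the general convention described above; the function name `abbreviate` is made up for illustration.

```python
def abbreviate(service_name: str) -> str:
    """Abbreviate 'X as a Service' as the initials of X followed by 'aaS'."""
    suffix = " as a Service"
    if not service_name.endswith(suffix):
        raise ValueError(f"{service_name!r} does not end with {suffix!r}")
    prefix = service_name[: -len(suffix)]
    initials = "".join(word[0].upper() for word in prefix.split())
    return initials + "aaS"


print(abbreviate("Desktop as a Service"))         # DaaS
print(abbreviate("Banking as a Service"))         # BaaS
print(abbreviate("Infrastructure as a Service"))  # IaaS
```

The convention is not strict. Security as a Service, for example, is abbreviated SECaaS so that it does not collide with SaaS, which this simple helper would not handle.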

Infrastructure as a Service (IaaS) 03:32-04:50 The first service I'll show you is called Infrastructure as a Service, or IaaS. With IaaS, the cloud service provider hosts the infrastructure hardware, such as servers, storage, or network hardware. End users connect to resources through the internet or other wide area network connections, or WAN connections.

For example, instead of being required to install multiple file servers with redundant hard drives in your server room, you can pay a company for storage space on their servers. This eliminates the need to maintain your own local file servers. Or let's say you need to process large amounts of data or compile and test applications. Instead of needing the hardware infrastructure on site, you can outsource this to an IaaS company, such as Amazon Web Services, or AWS. The neat thing with IaaS is that you can scale up as needed. Whenever more resources are needed, you just need to let the cloud provider know and everything can be immediately provided.

One thing to know about using IaaS is that it only provides the hardware resources. It's still your job to handle all the configuration and interfacing with the cloud infrastructure. This is where Platform as a Service comes in. Platform as a Service, or PaaS, provides all the same services as IaaS but also provides the configuration, management, and setup of the cloud platform.

Platform as a Service (PaaS) 04:50-05:17 For example, it handles installing operating systems, connecting virtual networks, and creating an entire environment that's ready for you to use immediately. One common use of PaaS is for software developers. Many companies that offer PaaS have packages that are specifically designed for developing, testing, and deploying software programs. Like IaaS, PaaS provides scalability and flexibility, and it can grow or shrink with your organization's needs.

Function as a Service (FaaS) 05:17-05:40 Similar to PaaS is Function as a Service, or FaaS. With FaaS, the cloud provider automatically executes a piece of code known as a function. The function is written to be responsible for a specific task, such as authenticating a user, carrying out a spell check on a document, or some other small, specific task. FaaS is often referred to as a serverless architecture.
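To make the idea of a function concrete, here is a minimal sketch of a spell-check function. The two-argument `handler(event, context)` shape follows the convention used by AWS Lambda's Python runtime; the event field `text` and the tiny word list are hypothetical.

```python
# Hypothetical dictionary; a real service would use a full word list.
KNOWN_WORDS = {"the", "cloud", "runs", "a", "function"}


def handler(event, context=None):
    """Return the words in event['text'] that are not in the dictionary."""
    words = event.get("text", "").lower().split()
    misspelled = [word for word in words if word not in KNOWN_WORDS]
    return {"misspelled": misspelled}


# The provider would call the handler for you; here we call it directly.
result = handler({"text": "The clowd runs a function"})
print(result)  # {'misspelled': ['clowd']}
```

The function does one small job and holds no state between calls, which is what lets the provider run it on demand without a dedicated server.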

Software as a Service (SaaS) 05:40-06:49 Next, we have Software as a Service, or SaaS. SaaS is designed for the average end user. It provides end users with the applications that they need to perform their day-to-day work. Instead of installing the program locally, users access the program through their web browser. Web-based email, Google Docs, and Microsoft's Office 365 are all examples of SaaS.

Some SaaS providers charge a monthly or annual fee. But most times you pay for only the applications that you need. For example, if a user needs a word processing application for nine hours a day, that's how much they pay for. If another user needs the same application for only three hours a month, they pay much less.

SaaS comes in two forms—simple multi-tenancy and fine-grained multi-tenancy. With simple multi-tenancy, each cloud service customer has their own dedicated resources segregated from other customers' resources. Essentially each user has their own cloud. With fine-grained multi-tenancy, the computing resources, applications, and other cloud resources are all shared. But each user's data is segregated so other customers can't access it.
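Fine-grained multi-tenancy can be pictured as one shared data store in which every read and write is scoped to a tenant. The sketch below is an illustration only; the `SharedStore` class and the tenant names are hypothetical.

```python
class SharedStore:
    """One store shared by all tenants, with every lookup filtered by tenant."""

    def __init__(self):
        self._rows = []  # (tenant_id, key, value) tuples in a single shared table

    def put(self, tenant_id, key, value):
        self._rows.append((tenant_id, key, value))

    def get(self, tenant_id, key):
        # Search newest first so a later write overrides an earlier one.
        for row_tenant, row_key, value in reversed(self._rows):
            if row_tenant == tenant_id and row_key == key:
                return value
        return None


store = SharedStore()
store.put("acme", "plan", "gold")
store.put("globex", "plan", "basic")

print(store.get("acme", "plan"))    # gold
print(store.get("globex", "plan"))  # basic
```

Both customers use the same underlying resource, but neither can read the other's data because the tenant identifier is part of every lookup.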

Security as a Service (SECaaS) 06:49-07:57 The final cloud service to know is Security as a Service, or SECaaS. You might be asking why you would want to outsource your security services to the cloud if you're already a security professional. Well, this isn't what Security as a Service does. In one way, SECaaS is similar to SaaS in that it provides a subscription model for applications and software. But instead of being used for productivity on workstations, the applications and software are specific to organizational security.

For example, you might subscribe to a Security as a Service system that provides a high-level antivirus detection service that includes continuous antivirus updates. Other services provided by SECaaS include DDoS protection, intrusion detection, and authentication services. One of the most common Security as a Service providers is Cloudflare.

Security as a Service can sometimes be much more cost-effective for an organization than purchasing all the necessary hardware and hiring the personnel needed to properly protect a network from viruses, malware, and intrusion. But it's still a necessity to have an on-site security professional.

Infrastructure as Code (IaC) 07:57-08:31 Implementing a cloud service can help alleviate many of the pains of developing and building your own network and infrastructure, but it still needs to be managed by skilled technicians.

To make the management easier, you can use Infrastructure as Code, or IaC. IaC lets you manage your IT infrastructure with configuration files. This can be done on both local and cloud networks. With IaC, your systems' configuration is a code file that can be easily edited and applied across networks. Using IaC is similar to how programmers use a repository to work on code as a team.
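The core idea, a configuration file that describes the desired state and a tool that works out what to change, can be sketched like this. It is not the interface of any real IaC tool; `plan`, `apply`, and the resource names are assumptions made for illustration.

```python
def plan(current: dict, desired: dict) -> list:
    """Compare the current state with the desired configuration and list the changes."""
    changes = []
    for name, spec in desired.items():
        if name not in current:
            changes.append(("create", name))
        elif current[name] != spec:
            changes.append(("update", name))
    for name in current:
        if name not in desired:
            changes.append(("delete", name))
    return changes


def apply(current: dict, desired: dict) -> dict:
    """Return the new state after applying the desired configuration."""
    return {name: dict(spec) for name, spec in desired.items()}


# Hypothetical current state and desired configuration.
current = {"web": {"cpu": 2}, "old-test": {"cpu": 1}}
desired = {"web": {"cpu": 4}, "db": {"cpu": 2}}

print(plan(current, desired))
# [('update', 'web'), ('create', 'db'), ('delete', 'old-test')]

current = apply(current, desired)
print(plan(current, desired))  # []
```

Because the configuration is just data in a file, it can be reviewed and versioned like code, and applying it a second time produces no further changes.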

Cloud Deployment Models 08:31-08:43 How users access cloud services is known as the cloud deployment model. There are four main models in use today. They're the public cloud, private cloud, community cloud, and hybrid cloud.

Public Cloud 08:43-08:50 The public cloud is a service that's provided to the public. This can be free of charge or paid. Dropbox and Google Drive are examples.

Private Cloud 08:50-09:00 A private cloud service consists of an infrastructure designed and operated solely for a single organization. This can be managed either internally or by a third party.

Community Cloud 09:00-09:10 Organizations sometimes share infrastructure that they all have in common, such as an application or regulatory requirements. This is known as a community cloud.

Hybrid Cloud 09:10-09:17 Finally, there's the hybrid cloud. Hybrid clouds are just combinations of private, community, or public clouds used for different purposes.

Summary 09:17-09:40 That's it for this lesson. In this lesson, we covered the basics of what the cloud is and how it works. Then we went over the different cloud models, which include IaaS, PaaS, SaaS, and SECaaS. And finally, we looked at the different deployment models. These include public, private, community, and hybrid clouds.

4.2.4 Cloud Computing Facts

Many organizations are shifting their networks to cloud-based services. Security specialists must fully understand the cloud and the special security risks that cloud services pose.

This lesson covers the following topics:

- Cloud basics

- Cloud deployment models

Cloud Basics

Cloud computing is a combination of software, data access, computation, and storage services provided to clients through the internet. The term cloud is a metaphor for the internet based on the basic cloud drawing used to represent the telephone network.

The term is now used to describe the internet infrastructure in computer network diagrams. The National Institute of Standards and Technology (NIST) has determined that for a service to be considered a true cloud service, it must meet the following five characteristics:

- Be an on-demand self-service - Users can dynamically obtain resources.

- Provide broad network access - The cloud service is provided over a network, either a LAN or the internet. The access is available on multiple platforms, such as mobile phones, tablets, laptops, and workstations.

- Provide resource pooling - Multiple users can use the same resources. These resources can be physical or virtual, but they must scale dynamically according to demand.

- Provide rapid elasticity - The services can seamlessly provision resources and scale resource usage depending on demand.

- Allow measured service - Resources can be controlled and optimized automatically. In addition, the service provider can collect information about the service.
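Rapid elasticity can be illustrated with a small scaling calculation. This is a sketch under assumed numbers: the function name `instances_needed` and the capacity of 250 connections per instance are hypothetical.

```python
import math


def instances_needed(connections: int, per_instance: int = 250, minimum: int = 1) -> int:
    """Return how many instances are required to serve the current connections."""
    return max(minimum, math.ceil(connections / per_instance))


print(instances_needed(100))   # 1
print(instances_needed(1000))  # 4
print(instances_needed(0))     # 1
```

When demand rises, the count goes up, and when demand falls, it drops back to the minimum, which is the scale-up and scale-back behavior that elasticity describes.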

Cloud Deployment Model

Cloud services can be deployed in several ways, including the following:

| Type | Description |

|---|---|

| Public cloud | A public cloud can be accessed by anyone. |

| Private cloud | A private cloud provides resources to a single organization. |

| Community cloud | A community cloud is designed to be shared by several organizations that all have some infrastructure in common, such as an application or regulatory requirements. |

| Hybrid cloud | A hybrid cloud is a combination of public, private, and community cloud resources from different service providers. The goal behind a hybrid cloud is to expand the functionality of a given cloud service by integrating it with other cloud services. |

4.2.5 Serverless Computing

This lesson covers the following topics:

- Serverless computing

- Serverless computing risks

- Cloud service models

Serverless Computing

Serverless is a modern design pattern for service delivery. It is strongly associated with modern web applications, notably Netflix ( aws.amazon.com/solutions/case-studies/netflix-and-aws-lambda ), but providers are appearing with products to completely replace the concept of the corporate LAN. With serverless, all the architecture is hosted within a cloud, but unlike traditional virtual private cloud (VPC) offerings, services such as authentication, web applications, and communications aren't developed and managed as applications running on VM instances within the cloud. Instead, the applications are developed as functions and microservices, each interacting with other functions to facilitate client requests. When the client requires some operation to be processed, the cloud spins up a container to run the code, performs the processing, and then destroys the container.

Billing is based on execution time rather than hourly charges. Examples of this type of service include AWS Lambda ( aws.amazon.com/lambda ), Google Cloud Functions ( cloud.google.com/functions ), and Microsoft Azure Functions ( azure.microsoft.com/services/functions ).
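The difference between execution-time billing and hourly billing can be sketched with simple arithmetic. Every rate below is a hypothetical placeholder, not a published price from any provider, and the formula (invocations multiplied by duration, memory, and a per-GB-second rate) is one common way such charges are modeled.

```python
def serverless_cost(invocations, avg_seconds, memory_gb, price_per_gb_second):
    """Cost when you pay only while code is actually running."""
    return invocations * avg_seconds * memory_gb * price_per_gb_second


def hourly_cost(instances, hours, price_per_instance_hour):
    """Cost when you pay for every hour an instance exists, busy or idle."""
    return instances * hours * price_per_instance_hour


# Hypothetical month: one million short requests versus two always-on servers.
function_bill = serverless_cost(
    invocations=1_000_000, avg_seconds=0.2, memory_gb=0.5,
    price_per_gb_second=0.00002,  # assumed rate for illustration only
)
server_bill = hourly_cost(
    instances=2, hours=720,
    price_per_instance_hour=0.05,  # assumed rate for illustration only
)
```

With these assumed numbers the function-based bill is much smaller, but the comparison can reverse for workloads that run continuously at high volume.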

Serverless platforms eliminate the need to manage physical or virtual server instances, so there is little to no management effort for software and patches, administration privileges, or file system security monitoring. There is no requirement to provision multiple servers for redundancy or load balancing. As all of the processing is taking place within the cloud, there is little emphasis on the provision of a corporate network. The service provider manages this underlying architecture.

The principal network security job is to ensure that the clients accessing the services have not been compromised in a way that allows a malicious actor to impersonate a legitimate user. This is a particularly important consideration for developer accounts and devices used to update the application code underpinning the services. These workstations must be fully locked down, running no applications or web code other than those necessary for development.

Serverless architecture depends heavily on the concept of event-driven orchestration to facilitate operations. For example, multiple services are triggered when a client connects to an application. The application needs to authenticate both the user and device, identify the location of the device and its address properties, create a session, load authorizations for the action, use application logic to process the action, read or commit information from a database, and write a log of the transaction. This design logic differs from applications written in a "monolithic" server-based environment.
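A few of the steps above can be sketched as small functions that react to events rather than being called from one central program. This is a toy, in-process illustration under stated assumptions: the event names, the `demo-token` check, and the `on`/`emit` helpers are all hypothetical and stand in for a real provider's event services.

```python
from collections import defaultdict

subscribers = defaultdict(list)  # event name -> functions to trigger
audit_log = []


def on(event_name):
    """Register a function to run whenever event_name is emitted."""
    def register(func):
        subscribers[event_name].append(func)
        return func
    return register


def emit(event_name, payload):
    """Trigger every function subscribed to event_name."""
    for func in subscribers[event_name]:
        func(payload)


@on("client.connected")
def authenticate(payload):
    payload["authenticated"] = payload.get("token") == "demo-token"
    if payload["authenticated"]:
        emit("client.authenticated", payload)


@on("client.authenticated")
def create_session(payload):
    payload["session_id"] = f"session-for-{payload['user']}"
    emit("session.created", payload)


@on("session.created")
def write_log(payload):
    audit_log.append(f"{payload['user']} opened {payload['session_id']}")


# One client connection sets off the whole chain of functions.
request = {"user": "alice", "token": "demo-token"}
emit("client.connected", request)
```

Each function knows only which event it handles and which event it emits next, which is the main contrast with a monolithic application where one program controls the entire sequence.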

Serverless Computing Risks

Serverless does have considerable risks. As a new technology, use cases and best practices continue to mature, especially concerning security. Keep in mind some of these risks when deciding how you will use serverless computing in your organization:

- There is a critical and unavoidable dependency on the service provider, with limited options for disaster recovery should that service provision fail.

- A serverless provider draws on multiple sources to provide their services. This will increase the attack surface.

- Serverless computing is particularly susceptible to denial-of-service (DoS) attacks if there are misconfigurations in the timeout settings between the host and a function or insecure configurations in the service provider's settings.

- Because the architecture of serverless applications can contain hundreds of serverless functions, there is a risk that one broken authentication can affect multiple functions.
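Because timeout misconfigurations are named as a risk above, one practical habit is to review function settings automatically. The sketch below assumes a hypothetical inventory format (a dictionary with a `timeout_seconds` key per function) and an arbitrary 30-second limit; real platforms expose these settings through their own tools and field names.

```python
def find_risky_timeouts(functions, max_timeout_seconds=30):
    """Return names of functions with a missing or overly long timeout."""
    risky = []
    for name, settings in functions.items():
        timeout = settings.get("timeout_seconds")
        # A missing timeout or a very long one lets slow requests pile up.
        if timeout is None or timeout > max_timeout_seconds:
            risky.append(name)
    return sorted(risky)


# Hypothetical inventory of deployed functions.
inventory = {
    "authenticate-user": {"timeout_seconds": 5},
    "resize-image": {"timeout_seconds": 900},
    "write-audit-log": {},
}
flagged = find_risky_timeouts(inventory)
```

A check like this does not remove the risk, but it makes unusual settings visible before an attacker finds them.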

Cloud Service Models

Cloud service providers offer a variety of service models depending on a network’s needs. The following table describes common models.

| Cloud Service Model | Description |
|---|---|
| Infrastructure as a Service (IaaS) | IaaS delivers infrastructure to the client, such as processing, storage, networks, and virtualized environments. |
| Platform as a Service (PaaS) | PaaS provides the totality of the configuration, management, and setup of the cloud platform. |
| Function as a Service (FaaS) | With FaaS, the cloud provider automatically executes a piece of code, known as a function, that is responsible for a small, specific task such as authenticating a user or spell checking a document. |
| Software as a Service (SaaS) | SaaS is designed for the average end user. It provides users with the applications needed to perform day-to-day work. Instead of installing the program locally, users access the program through a web browser. Web-based email, Google Docs, and Microsoft's Office 365 are all examples of SaaS. Some SaaS providers charge a monthly or annual fee for access. These fees can also be based on usage time. |
| Security as a Service (SECaaS) | SECaaS providers integrate services into a corporate infrastructure. The applications and software are specific to organizational security. Security as a Service is also abbreviated as SaaS, although this can easily be confused with Software as a Service. |
| Infrastructure as Code (IaC) | IaC is the managing and provisioning of the network and cloud systems through code or scripts instead of through manual processes. |
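The Infrastructure as Code idea can be sketched as comparing a declared, desired state against what currently exists and deriving the changes to make. This is a simplified illustration, not the behavior of any specific IaC tool; the resource names and the `plan` function are hypothetical.

```python
def plan(desired, current):
    """Work out which resources to create, update, or delete."""
    desired_names, current_names = set(desired), set(current)
    return {
        "create": sorted(desired_names - current_names),
        "update": sorted(
            name for name in desired_names & current_names
            if desired[name] != current[name]
        ),
        "delete": sorted(current_names - desired_names),
    }


# Desired state lives in version-controlled code; current state is what
# is actually deployed. Both inventories here are made up for illustration.
desired_state = {
    "web-subnet": {"cidr": "10.0.1.0/24"},
    "db-subnet": {"cidr": "10.0.2.0/24"},
    "fw-rule-https": {"port": 443, "action": "allow"},
}
current_state = {
    "web-subnet": {"cidr": "10.0.1.0/24"},
    "db-subnet": {"cidr": "10.0.9.0/24"},
    "legacy-ftp-rule": {"port": 21, "action": "allow"},
}
changes = plan(desired_state, current_state)
```

Because the desired state is written down as code, the same environment can be reviewed, repeated, and rebuilt without relying on manual steps.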

4.2.6 Network Architectures


Network Architecture 00:00-00:39

When a large office building is constructed, the building is divided into "fire compartments."

This compartmentalization is designed to limit the damage if a fire breaks out by keeping the damage contained in the compartment where it started. The same concept of compartmentalization is applied in cybersecurity, but we refer to it as segmentation.

Network segmentation divides a large network into smaller networks, or subnetworks, to protect against malicious traffic. It's also used to improve performance. Segmentation helps control access and limit damage by implementing control points that decide whether traffic is allowed to pass.

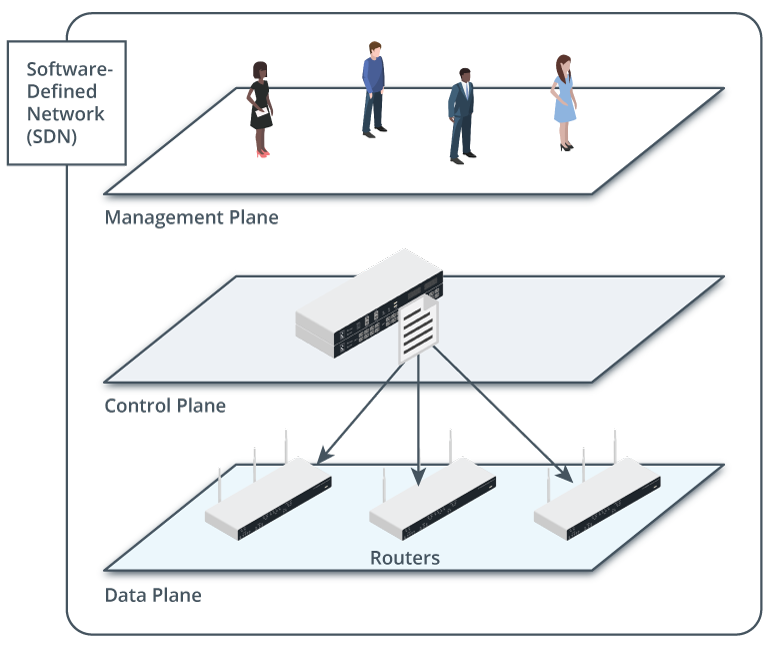

Software-Defined Networks 00:39-02:16

Software-defined networks, or SDNs, extend the functionality and control provided by network segmentation through greater flexibility. SDNs replace physical network devices, like routers and switches, with a virtual control plane that makes all decisions about traffic management.

As networks become more complex—perhaps involving thousands of physical and virtual computers and appliances—it becomes more challenging to implement network policies. SDN architecture saves security administrators time through centralized configuration and control.

It allows for fully automated deployments of network links, appliances, and servers.

We can think of software-defined networks as operating in three planes. The first plane is the control plane, where the decisions are made about how traffic should be prioritized and secured and where it should be switched. The second plane can be called a data plane. Here the actual switching and routing of traffic occurs, as well as the imposition of access control lists for security. The management plane is the last plane and includes monitoring traffic conditions and network status.

Let's look at an example. A software-defined networking application defines policy decisions on the control plane. These decisions are then implemented on the data plane by a network controller application, which interfaces with the network devices using APIs. The interface between the SDN applications and the SDN controller is described as the "northbound" API, while the interface between the controller and appliances is the "southbound" API. And all of this is being monitored by the management plane.

Zero Trust Architecture 02:16-04:36

Now that we've looked at how segmentation with SDNs reduces the risks associated with managing large and complicated networks, let's look at another important component of reducing risks through a Zero Trust architecture. Zero Trust architectures assume that nothing should be taken for granted and that all network access must be continuously verified and authorized. Any user, device, or application seeking access must be authenticated and verified. Zero Trust differs from traditional security models based on simply granting access to all users, devices, and applications contained within an organization's trusted network.

Organizations' increased dependence on information technology has driven requirements for services to be always on, always available, and accessible from anywhere.

Cloud platforms have become an essential component of technology infrastructures, driving broad software and system dependencies and widespread platform integration. The distinction between inside and outside is essentially gone.

The opportunity for breach is very high for an organization leveraging remote workforces, running a mix of on-premises and public cloud infrastructure, and using outsourced services and contractors.

Staff and employees are using computers attached to home networks, or worse, unsecured public Wi-Fi. Critical systems are accessible through various external interfaces and run software developed by outsourced contracted external entities. In addition, many organizations design their environments to accommodate Bring Your Own Device.

Zero Trust has many components and technologies it uses within an organization's network to help protect it from this increased risk. Let's briefly go through some of these essential elements.

Zero Trust architecture implements network and endpoint security by controlling access to applications, data, and networks.

It uses identity and access management, or IAM, to ensure that only verified users can access systems and data.

Zero Trust restricts network traffic to only legitimate requests through policy-based enforcement. It also manages access to cloud-based applications, services, and data through cloud security.

Zero Trust also relies on network segmentation to control access from trusted locations, and it provides threat detection and prevention by identifying and preventing attacks against the network and the systems connected to it.

Secure Access Service Edge 04:36-05:57

In the past, networks were mainly defined by location.

Zero Trust doesn't define security through network boundaries but through resources such as users, services, and workflows. Microsegmentation plays a critical part in providing this level of isolation.

Secure Access Service Edge, or SASE, is a cloud-based architecture that combines Zero Trust security services with networking services. It provides the protection of a secure access platform with the agility of a cloud-delivered security architecture.

SASE streamlines the process of granting secure access to all users, regardless of location.

SASE is a confluence of wide area networks and network security services, such as CASB, Firewall as a Service, and Zero Trust, in a cloud-delivered service model.

SASE integrates multiple services, like network access control, web security gateways, and virtual private network connections. It also offers advanced features such as IAM. IAM facilitates single sign-on across various applications.

SASE is an important architectural model designed to protect data in modern infrastructures that depend heavily on cloud platforms. By combining networking and security functions into a single cloud-hosted service, SASE eliminates the need for dedicated hardware and facilitates remote management of networks and systems.

Summary 05:57-06:27

Well, that's it for this lesson. In this lesson, we talked about using software-defined networks to create network segmentation. Then, we looked at Zero Trust architecture and how it uses technologies and network components to protect networks against increased risk created by more diverse network environments. We finished this lesson by reviewing how Secure Access Service Edge, or SASE, integrates various networking and security services to provide cloud-based security in a way that works with an ever-changing network environment.

4.2.7 Software-Defined Networking (SDN)

This lesson covers the following topics:

- Software-defined networking (SDN) segmentation

- SDN model

Software-Defined Networking (SDN) Segmentation

Network segmentation divides a large network into smaller networks, or subnetworks, to improve security and performance. Segmentation helps to control access and limit the spread of malicious traffic by implementing control points designed to decide whether traffic should be allowed to pass. By separating traffic flows, incident responders can quickly contain malicious traffic within one part of the network to prevent its spread throughout the entire network. Software-defined networks extend the functionality and control provided by network segmentation by providing greater flexibility. For example, a software-defined network makes implementing networks containing only a single device much more manageable. Consider the three segmentation arrangements below.

- Physical segmentation involves separating different network zones using separate switches, routers, and cabling.

- Virtual segmentation involves using software to create virtual networks within an existing network, allowing different types of traffic to be separated and isolated. Virtual segmentation is associated with cloud computing environments, where networks are defined using features provided by the virtualization platform.

- Logical segmentation involves using software to create logical divisions within a single network using virtual LANs, or VLANs.
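Whichever arrangement is used, segmentation comes down to a control point deciding whether traffic between two segments may pass. The following is a minimal sketch using Python's standard `ipaddress` module; the subnet ranges, segment names, and the single allowed flow are assumptions made for illustration.

```python
import ipaddress

# Hypothetical segments, each mapped to its own subnet.
SEGMENTS = {
    "users": ipaddress.ip_network("10.0.10.0/24"),
    "servers": ipaddress.ip_network("10.0.20.0/24"),
    "guests": ipaddress.ip_network("10.0.30.0/24"),
}

# Only these cross-segment flows are permitted (source, destination).
ALLOWED_FLOWS = {("users", "servers")}


def segment_of(address):
    """Return the name of the segment that contains the address, if any."""
    ip = ipaddress.ip_address(address)
    for name, network in SEGMENTS.items():
        if ip in network:
            return name
    return None


def is_allowed(source, destination):
    """Control point: decide whether traffic may pass between two hosts."""
    src_segment = segment_of(source)
    dst_segment = segment_of(destination)
    if src_segment is None or dst_segment is None:
        return False  # Unknown addresses are denied by default.
    return src_segment == dst_segment or (src_segment, dst_segment) in ALLOWED_FLOWS
```

For example, `is_allowed("10.0.10.5", "10.0.20.8")` permits a user workstation to reach a server, while the same request from the guest segment is refused, which keeps a compromised guest device from reaching the servers.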

SDN Model

Software-defined networking (SDN) abstracts physical network devices, like routers and switches, replacing them with a virtual control plane that makes all decisions regarding traffic management. SDN allows for building cloud-based networks using virtualized equivalents of physical routers, firewalls, and other network devices used in on-premises networks. SDN architectures support security applications because the central controller allows data planes to be rapidly reprogrammed using automated provisioning and policy-based controls.

As networks become more complex (perhaps involving thousands of physical and virtual computers and appliances), it becomes more challenging to implement network policies, such as ensuring security and managing traffic flow. SDN architecture saves network and security administrators the time and complexity inherent in individually configuring appliances with the settings required to enforce security policies. It also allows for fully automated deployment (or provisioning) of network links, appliances, and servers. Centralized configuration and control make SDN essential to the latest automation and orchestration technologies.

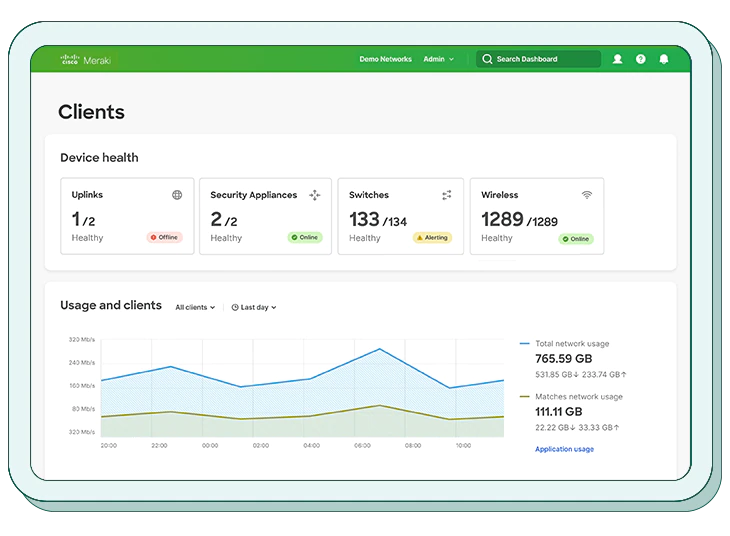

Description

A header at the top reads Clients. The dashboard is divided into two sections. The first section, titled device, has four blocks labeled uplinks, security appliances, switches, and wireless. The second section, titled usage and clients, shows a line graph that plots data usage over time.

Cisco Meraki Management Dashboard. (Screenshot courtesy of Cisco Systems, Inc. Unauthorized use not permitted. https://meraki.cisco.com/products/meraki-dashboard/)

With a vast array of devices to manage and configure, it is effective to use an abstracted model to define how the network operates. In a model such as this, network functions are divided into three "planes":

- Control plane: Makes decisions about how traffic should be prioritized and secured, and where it should be switched.

- Data plane: Handles the actual switching and routing of traffic and imposition of access control lists (ACLs) for security.

- Management plane: Monitors traffic conditions and network status.

A software-defined networking (SDN) application can be used to define policy decisions on the control plane. These decisions are then implemented on the data plane by a network controller application, which interfaces with the network devices using APIs. The interface between the SDN applications and the SDN controller is described as the "northbound" API, while the interface between the controller and appliances is the "southbound" API. SDN can be used to manage not only compatible physical appliances but also virtual switches, routers, and firewalls.
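The relationship between the planes and the two API directions can be sketched with a few small classes. This is a conceptual model only; the class names, the `block_ports` policy format, and the method names are hypothetical and do not correspond to any real controller's API.

```python
class Switch:
    """Data plane device: forwards traffic according to installed rules."""

    def __init__(self, name):
        self.name = name
        self.blocked_ports = set()

    def install_rule(self, port):
        # Reached by the controller over the "southbound" interface.
        self.blocked_ports.add(port)

    def forwards(self, port):
        return port not in self.blocked_ports


class Controller:
    """Translates control plane policy into rules on every device."""

    def __init__(self, devices):
        self.devices = devices

    def apply_policy(self, policy):
        # Reached by SDN applications over the "northbound" interface.
        for port in policy["block_ports"]:
            for device in self.devices:
                device.install_rule(port)


def status_report(devices):
    """Management plane: observe what each device is currently enforcing."""
    return {device.name: sorted(device.blocked_ports) for device in devices}


switches = [Switch("sw1"), Switch("sw2")]
controller = Controller(switches)
controller.apply_policy({"block_ports": [23]})  # one policy, every device
report = status_report(switches)
```

The administrator states the policy once, and the controller pushes it to every device, which is the time saving that centralized configuration provides.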

This architecture reduces the risks associated with managing a large and complicated network infrastructure. It also allows for fully automated deployment (or provisioning) of network links, appliances, and servers. These features make SDN a critical component driving the adoption of automation and orchestration technologies.

Description

A horizontal plane labeled management plane has a group of four people. A horizontal plane below this, labeled control plane, has a control device with a file. Arrows from the file point to three routers on a horizontal plane, labeled data plane, below the control plane.

Data plane devices managed by a control plane device and monitored by a management plane. (Images © 123RF.com)

4.2.8 Zero Trust Architectures

This lesson covers the following topics:

- The emerging need for Zero Trust Architecture (ZTA)

- Trends driving deperimeterization

- Key benefits of Zero Trust Architecture

- Secure Access Service Edge (SASE)

The Emerging Need for Zero Trust Architecture (ZTA)

Organizations' increased dependence on information technology has driven requirements for services to be always on, always available, and accessible from anywhere. Cloud platforms have become an essential component of technology infrastructures, driving broad software and system dependencies and widespread platform integration. The distinction between inside and outside is essentially gone. For an organization leveraging remote workforces, running a mix of on-premises and public cloud infrastructure while using outsourced services and contractors, the opportunity for breach is very high. Staff and employees are using computers attached to home networks, or worse, unsecured public Wi-Fi. Critical systems are accessible through various external interfaces and run software developed by outsourced, contracted external entities. In addition, many organizations design their environments to accommodate Bring Your Own Device (BYOD).

As these trends continue, implementing Zero Trust Architecture will become even more critical. This model assumes that nothing should be taken for granted and that all network access must be continuously verified and authorized. Any user, device, or application seeking access must be authenticated and verified. Zero Trust differs from traditional security models that are based on simply granting access to all users, devices, and applications contained within an organization's trusted network.

NIST SP 800-207, Zero Trust Architecture, defines Zero Trust as "cybersecurity paradigms that move defenses from static, network-based perimeters to focus on users, assets, and resources." A Zero Trust Architecture can protect data, applications, networks, and systems from malicious attacks and unauthorized access more effectively than a traditional architecture by ensuring that only necessary services are allowed and only from appropriate sources. Zero Trust enables organizations to offer services based on varying levels of trust, such as providing more limited access to sensitive data and systems.
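The shift away from perimeter-based trust can be sketched as an access decision that is evaluated on every request and never considers where on the network the request came from. The example below is a simplified illustration; the `AccessRequest` fields, the resource names, and the permission table are assumptions, not part of NIST SP 800-207.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_authenticated: bool
    device_compliant: bool
    role: str
    resource: str


# Hypothetical mapping of each resource to the roles that may use it.
PERMISSIONS = {
    "hr-records": {"hr"},
    "build-server": {"engineering"},
}


def authorize(request):
    """Evaluate one request; nothing is trusted because of its location."""
    if not (request.user_authenticated and request.device_compliant):
        return False
    # Least privilege: the role must be explicitly listed for the resource.
    return request.role in PERMISSIONS.get(request.resource, set())
```

An engineer on a compliant device is allowed to reach `build-server` but not `hr-records`, and the same engineer on a non-compliant device is refused everywhere, even when connected from inside the office.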

For more details, NIST SP 800-207 is available at https://csrc.nist.gov/publications/detail/sp/800-207/final

Trends Driving Deperimeterization

- The cloud: Enterprise infrastructures are typically spread between on-premises and cloud platforms. In addition, cloud platforms may be used to distribute computing resources globally.

- Remote work: More and more organizations have adopted either part-time or full-time remote workforces. This remote workforce expands the enterprise footprint dramatically. In addition, employees working from home are more susceptible to security lapses when they connect from unsecure locations and use personal devices.

- Mobile devices: Modern smartphones and tablets are often used as primary computing devices because they have ample processor, memory, and storage capacity. More and more corporate data is accessed through these devices as their capabilities expand. Mobile devices and their associated operating systems have varying security features, and many devices lose vendor support shortly after release, meaning they cannot be updated or patched. In addition, mobile devices are often lost or stolen.

- Outsourcing and contracting: Support arrangements often provide remote access to external entities, and this access can often mean that the external provider's network serves as an entry point to the organizations they support.

- Wireless networks (Wi-Fi): Wireless networks are susceptible to an ever-increasing array of exploits, and they are often open and unsecured or protected by a network security key that is well known.

Key Benefits of Zero Trust Architecture

- Greater security: All users, devices, and applications are authenticated and verified before network access.

- Better access controls: More stringent limits are put in place regarding who or what can access resources and from what locations resources can be accessed.

- Improved governance and compliance: There are limits on data access and greater operational visibility on user and device activity.

- Increased granularity: Users are granted access to what they need when they need it.
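Granting access "to what they need when they need it" can be sketched as a grant that names one user and one resource and expires on its own. This is an illustrative model only; the `AccessGrants` class and its method names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


class AccessGrants:
    """Tracks time-limited grants for specific user and resource pairs."""

    def __init__(self):
        self._expires_at = {}

    def grant(self, user, resource, minutes, now):
        # Each grant covers one resource and carries its own expiry time.
        self._expires_at[(user, resource)] = now + timedelta(minutes=minutes)

    def is_allowed(self, user, resource, now):
        expires = self._expires_at.get((user, resource))
        return expires is not None and now < expires


start = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
grants = AccessGrants()
grants.grant("alice", "db-admin", minutes=30, now=start)
```

Ten minutes after `start`, the check for alice on `db-admin` succeeds; after the 30-minute window it fails, and it never succeeds for any other user or resource.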

A Zero Trust Architecture requires a thorough understanding of the many components and technologies used within an organization's network. The following list outlines the essential components of ZTA:

- Network and endpoint security: Controls access to applications, data, and networks.

- Identity and access management (IAM): Ensures that only verified users can access systems and data.

- Policy-based enforcement: Restricts network traffic to only legitimate requests.

- Cloud security: Manages access to cloud-based applications, services, and data.

- Network visibility: Analyzes network traffic and devices for suspicious activity.

- Network segmentation: Controls access to sensitive data and capabilities from trusted locations.

- Data protection: Controls and secures access to sensitive data, including encryption and auditing.

- Threat detection and prevention: Identifies and prevents attacks against the network and the systems connected to it.

Secure Access Service Edge (SASE)

Zero Trust does not define security through network boundaries, but instead via resources such as users, services, and workflows. Microsegmentation plays a critical part in providing this level of isolation, and Secure Access Service Edge (SASE) combines the protection of a secure access platform with the agility of a cloud-delivered security architecture. SASE offers a centralized approach to security and access, providing end-to-end protection and streamlining the process of granting secure access to all users, regardless of location. SASE is a confluence of wide area networks (WANs) and network security services, such as a cloud access security broker (CASB) and Firewall as a Service (FWaaS) in a cloud-delivered model. Zero Trust and SASE are important architectural models designed to protect data in modern infrastructures that depend heavily on cloud platforms.

SASE aims to simplify the management of multiple network and security services by combining networking and security functions into a single cloud-hosted service. SASE eliminates the need for dedicated hardware, which allows security teams to quickly adapt to changes while maintaining secure access to any user from any device. SASE also facilitates remote management of networks and systems. It helps to integrate multiple network and security services, such as network access control (NAC), web security gateways, and virtual private network (VPN) connections.

While SASE simplifies network and security services, it also offers advanced features such as identity and access management, secure web gateways, and Zero Trust network access, all of which are designed to protect an organization's data and applications while providing uninterrupted access to users. The following points expand on these features.

- Identity and access management (IAM): Allows businesses to define user rights (such as login credentials, privileges, and policies) and apply those settings to all users. IAM also facilitates single sign-on (SSO) across various applications, streamlining access management and reducing administrative overhead. SASE supports many IAM solutions, allowing organizations to leverage existing tools and services.

- Secure web gateways: Perform URL filtering, content scanning, and malware protection. SASE supports many web gateways, including Cisco Umbrella, Cisco Web Security Appliance, F5 BIG-IP, Palo Alto Networks®, and AWS WAF.

- Zero Trust network access: Monitors network activities and blocks unauthorized traffic. SASE supports a range of Zero Trust network access solutions; one example is Cisco Advanced Malware Protection (AMP).
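The URL filtering performed by a secure web gateway can be sketched in a few lines. This is a conceptual illustration, not how any of the products named above work internally; the blocked domains are reserved example names, and the function name is hypothetical. It uses `urlparse` from Python's standard library to extract the host name.

```python
from urllib.parse import urlparse

# Hypothetical blocklist; real gateways rely on large, categorized feeds.
BLOCKED_DOMAINS = {"malware.example", "phishing.example"}


def is_url_allowed(url):
    """Allow a request unless its host is a blocked domain or subdomain."""
    host = (urlparse(url).hostname or "").lower()
    if not host:
        return False  # Deny anything that cannot be parsed into a host name.
    return not any(
        host == domain or host.endswith("." + domain)
        for domain in BLOCKED_DOMAINS
    )
```

Matching on the full domain and its subdomains means `cdn.malware.example` is blocked along with `malware.example`, while an unrelated host such as `notmalware.example` is not caught by accident.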